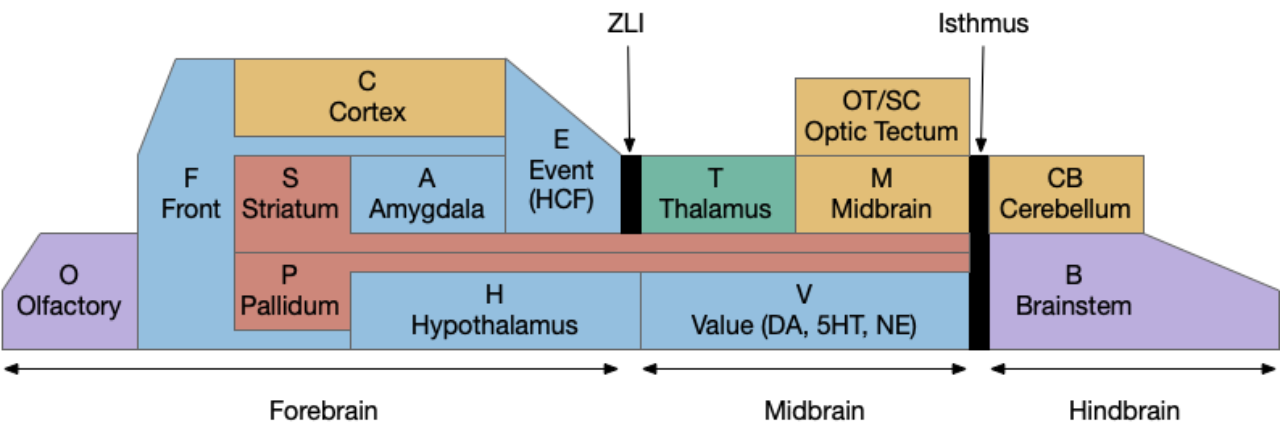

My initial idea for essay 13 is to explore the chimaeral model [Tosches and Arendt 2013], which models the bilaterian mind as composed of two poles: an apical pole built around chemosensation, peptides, and possibly a more broadcast style of signaling, and a motor pole built around central pattern generators and muscle control.

The apical pole is based on the larvae of many marine animals, which live as zooplankton. Platynereis, for example, is a marine worm with a ciliated larva that follows the apical plan. The cilia are important, because cilia and muscles have very different control needs. Cilia can work largely independently, or in a self-organizing fashion where neighboring cells beat together, so any control system for them is intrinsically modulating, not directly controlling. In contrast, muscles require a nerve net to function, because the contraction of many muscle cells must be coordinated.
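The contrast between modulating and directly controlling can be put in a few lines of Rust. This is only a minimal sketch: the `Cilium` type, the `gain` parameter, and the `muscle_contraction` function are hypothetical names invented for illustration, not part of the essay's model, and the numbers carry no biological meaning.

```rust
/// A ciliated cell that beats on its own. Hypothetical type for illustration.
#[derive(Debug, Clone)]
pub struct Cilium {
    /// Intrinsic beat frequency, set by the cell itself (arbitrary units).
    pub base_hz: f32,
}

impl Cilium {
    /// With no input (gain = 1.0) the cell keeps its intrinsic rhythm.
    /// A modulator can only scale that rhythm; it never drives single beats.
    pub fn beat_hz(&self, gain: f32) -> f32 {
        self.base_hz * gain.max(0.0)
    }
}

/// A muscle cell in this sketch does nothing without an explicit command,
/// which is why it needs a coordinating nerve net upstream.
pub fn muscle_contraction(command: Option<f32>) -> f32 {
    command.unwrap_or(0.0).clamp(0.0, 1.0)
}
```

In this framing the cilia have a sensible default behavior and the nervous system only nudges it, while the muscle has no default at all.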

I’m hoping that exploring the apical model, in which the nervous system modulates downstream systems, will also counterbalance the natural inclination to imagine neural control as a micromanager controlling every individual muscle or gland, or, if not micromanaging, then delegating in a strict, naive hierarchical fashion.

Programming model meta

With such a simple animal, whose behavior is easily programmed, one question is what value a programming model has when the problem is easily solved. Or, perhaps better: what kinds of models might be useful?

This question matters particularly for an essay model, because such a model resembles old-style AI, which runs into the problem of wishful mnemonics [McDermott 1976], where the naming of variables and functions substitutes for any actual insight.

For example, some future essay might model hunger in the hypothalamus:

```rust
pub struct Motivation {
    hunger: f32,
    // ...
}

fn choose_goal(motives: &Motivation) {
    if motives.hunger > 0. {
        find_food();
    }
}
```

But all the work here is in the naming: goals, motivation, even the base “hunger,” which is simply a floating point number. The model itself is trivial; all the work is in the illusion given by pointed naming. McDermott suggests replacing all variables with generated symbols like G0019, and seeing if the model still works.
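As a rough illustration of McDermott's test, here is the same hunger logic with every name swapped for a generated symbol. The symbols other than G0019 are made up for this sketch, and the return type is an addition so that the function has an observable result without calling an undefined `find_food`.

```rust
// Every identifier is a generated symbol, following McDermott's suggestion.
pub struct G0019 {
    pub g0020: f32,
}

#[derive(Debug, PartialEq)]
pub enum G0021 {
    G0022,
    G0023,
}

// Returns G0022 when the single field is positive, G0023 otherwise.
pub fn g0024(g0025: &G0019) -> G0021 {
    if g0025.g0020 > 0.0 {
        G0021::G0022
    } else {
        G0021::G0023
    }
}
```

Stripped of its names, the model is visibly a threshold test on one number, which is the point of the exercise.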

So, where is the value? Not as a solution, because the solution is trivial. I think the model can instead have value as an executable thought experiment, much like a diagram. The advantage of a program is that it can catch handwaving assumptions, because writing a program forces you to make decisions.

Programming infrastructure: ECS and ticks

The essay models use a loosely coupled system with a tick-by-tick simulation. The loose coupling forces communication to be an important issue, because neural fibers and connectivity are as important as grey matter. The ticking simulation forces time to be a fundamental issue.

For a base engine, the essays use an ECS (entity, component, system) based on the Bevy game engine. Each tick runs all the systems, which each run independently, using multiple threads where possible.

A setup phase registers the systems with the ECS engine. For example, a pressure-sensing neuron in the zooplankton might register with the application as follows, where sense_pressure is a function:

```rust
app.system(Update, sense_pressure);
```

The simulation proceeds with ticks to execute all the systems, called as follows:

```rust
app.tick();
```

Because of the loose coupling, the model isn’t a single algorithm but a collection of independent systems that each influence the whole.
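To make the register-then-tick cycle concrete, here is a minimal stand-in written in plain Rust with no dependencies. It is not Bevy and not the essay's actual engine: the `App`, `World`, and `Update` types below are assumptions that only mimic the shape of the two calls above, and systems run sequentially instead of on multiple threads.

```rust
/// Shared state that systems read and write; a stand-in for ECS resources.
#[derive(Debug, Default)]
pub struct World {
    pub ticks: u64,
    pub pressure: f32,
    pub log: Vec<String>,
}

/// Placeholder schedule label, present only to mirror `app.system(Update, ...)`.
pub struct Update;

/// In this sketch a system is just a function over the shared world.
pub type System = fn(&mut World);

#[derive(Default)]
pub struct App {
    world: World,
    systems: Vec<System>,
}

impl App {
    pub fn new() -> Self {
        Self::default()
    }

    /// Register a system; it will run once on every tick.
    pub fn system(&mut self, _schedule: Update, system: System) -> &mut Self {
        self.systems.push(system);
        self
    }

    /// Run every registered system once, then advance the tick counter.
    pub fn tick(&mut self) {
        for system in &self.systems {
            system(&mut self.world);
        }
        self.world.ticks += 1;
    }

    pub fn world(&self) -> &World {
        &self.world
    }

    pub fn world_mut(&mut self) -> &mut World {
        &mut self.world
    }
}

/// Example system: records a line whenever pressure is above threshold.
fn sense_pressure(world: &mut World) {
    if world.pressure > 0.5 {
        world.log.push(format!("tick {}: pressure", world.ticks));
    }
}

/// Two ticks: one with high pressure, one with low pressure.
pub fn demo() -> Vec<String> {
    let mut app = App::new();
    app.system(Update, sense_pressure);
    app.world_mut().pressure = 0.8;
    app.tick();
    app.world_mut().pressure = 0.2;
    app.tick();
    app.world().log.clone()
}
```

Nothing in `tick` knows what any system does; it only guarantees that each one gets a turn, which is what keeps the model a collection of independent parts.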

A sensing neuron might look like the following:

```rust
fn sense_pressure(
    body: Res<Body>,
    mut peptides: OutEvent<Peptide>
) {
    if body.pressure() > 0.5 {
        peptides.send(Peptide::Pressure);
    }
}
```

Here the body represents the physical model, so the sensory neuron is receiving pressure from the POV of the body, as opposed to knowing about the broader world. If it senses sufficient water pressure, it broadcasts a peptide as a message.

Note that the sensor neuron doesn’t know what the peptide will do. Although in the full model, the pressure peptide will cause the cilia to beat faster, swimming up, that knowledge is outside of the sensor.
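The separation between sender and receiver might be sketched as follows, again in dependency-free Rust. The `modulate_cilia` function, the `Cilia` type, the plain `Vec` used as a peptide mailbox, and the frequency constants are all hypothetical; the essay's real model uses its own `Res` and `OutEvent` types.

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Peptide {
    Pressure,
}

#[derive(Debug)]
pub struct Body {
    pub pressure: f32,
}

#[derive(Debug)]
pub struct Cilia {
    pub beat_hz: f32,
}

/// Sensor: knows about the body and the outgoing mailbox, nothing else.
pub fn sense_pressure(body: &Body, peptides: &mut Vec<Peptide>) {
    if body.pressure > 0.5 {
        peptides.push(Peptide::Pressure);
    }
}

/// Effector: knows about peptides and cilia, nothing about sensors.
pub fn modulate_cilia(peptides: &[Peptide], cilia: &mut Cilia) {
    const BASE_HZ: f32 = 10.0; // hypothetical resting beat frequency
    const BOOST_HZ: f32 = 5.0; // hypothetical increase while the peptide is present
    let boosted = peptides.contains(&Peptide::Pressure);
    cilia.beat_hz = if boosted { BASE_HZ + BOOST_HZ } else { BASE_HZ };
}

/// One tick: the mailbox is the only link between the two systems.
pub fn tick(body: &Body, cilia: &mut Cilia) {
    let mut peptides = Vec::new();
    sense_pressure(body, &mut peptides);
    modulate_cilia(&peptides, cilia);
}
```

Either function could be replaced or removed without editing the other, since the only thing they share is the `Peptide` message.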

In this example, the pressure sensor is a unique neuron so there’s no need to have an individual component representing it, but a more complicated animal would have specific components for each physical sensor.
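For the more complicated case, one possible shape is a component per physical sensor with a single system iterating over them. The `PressureSensor` component, the `Side` enum, and the `pressure_at` callback are invented for this sketch and are not taken from the essay's code.

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Side {
    Left,
    Right,
}

/// One component per physical sensor (hypothetical layout).
#[derive(Debug, Clone, Copy)]
pub struct PressureSensor {
    pub side: Side,
    pub threshold: f32,
}

/// A single system visits every sensor component and reports which ones fired.
/// `pressure_at` stands in for the body's local pressure reading.
pub fn sense_all(
    sensors: &[PressureSensor],
    pressure_at: impl Fn(Side) -> f32,
) -> Vec<Side> {
    sensors
        .iter()
        .filter(|s| pressure_at(s.side) > s.threshold)
        .map(|s| s.side)
        .collect()
}
```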

References

The Bevy game engine. https://bevyengine.org/

McDermott, D. “Artificial intelligence meets natural stupidity.” ACM SIGART Bulletin 57 (1976): 4-9.

Tosches, Maria Antonietta, and Detlev Arendt. “The bilaterian forebrain: an evolutionary chimaera.” Current opinion in neurobiology 23.6 (2013): 1080-1089.